A Practical Crash Course to Computer Architecture

In university, I took a course on computer architecture. But in hindsight, I think:

- It made things wayyyy more complicated than necessary

- It went into depth in all the wrong places: lots of theory and not enough application, which made it difficult to connect the dots when trying to actually use the information for something

So after a little bit of researching and thinking, I thought I would share what I now understand about the basics of computer architecture, framed in a way I can actually apply.

Tackling computer architecture in a single post is a pretty formidable task...or so you would think, but really it is not all that bad. This post is centered around Apple: its computers, its processors (spotlight on the M1 chip), etc.

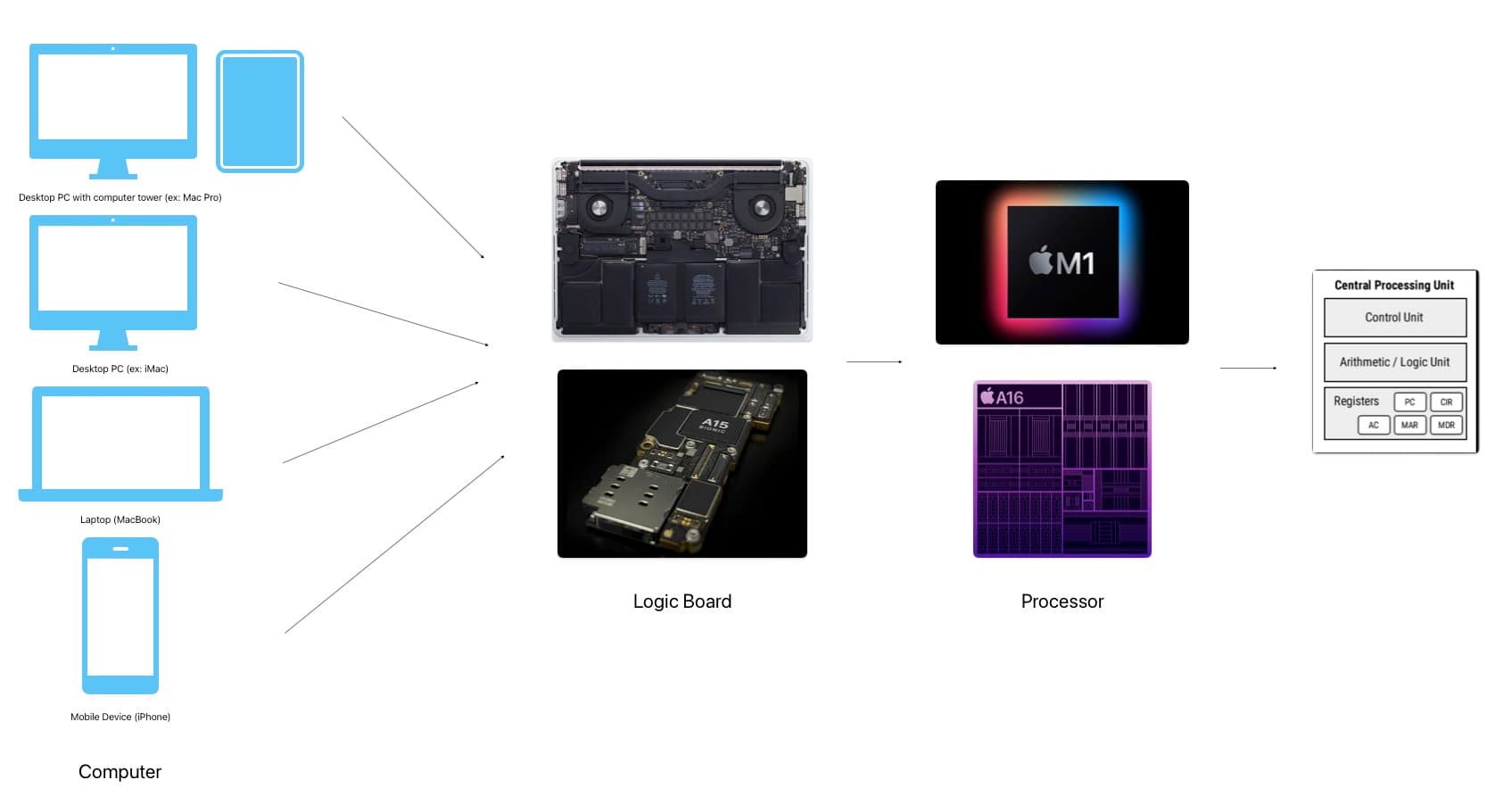

So let’s consider a computer…or a couple. There are desktop PCs, both ones with a tower (Mac Pro) and ones without (iMac). There are laptops (MacBook Pro, MacBook Air). There are mobile devices (iPhone).

All of these computing devices, whether mobile, portable, or stationary, have a motherboard/logic board that houses a number of key components enabling them to do all the amazing computer magic they do. One of those key components is the processor.

Now this is when things get a little…ambiguous. This processor…some know it as the CPU, some call it a processor chip, others a microprocessor. Use whatever term floats your boat, but the jazz-hands phenomenon around this term makes it quite confusing to understand what’s going on. So in this post, this entity will be explicitly referred to as the processor, and the term CPU will be reserved for another, more specialized entity that is a part of the processor, which I will discuss in a little bit.

Ok, going back to this processor…there are probably a couple of popular ones you are familiar with. Lately the Apple Silicon processors have been all the rage, and in my opinion rightfully so. These include the A16 Bionic, the latest release in the A Series for iPhones, and the M2 (and its predecessor the M1, quite the disruptor to the industry in 2020) in the M Series for PCs. Other examples include Intel’s Core i3, i5, and i7 processors.

So there are quite a few processors, but what are their differences? Their impact? Why should you gaf?? Well, for starters, this page is gonna make a lot more sense, so next time you are buying a new laptop, phone, or whatever the latest and coolest device is, you know what you are getting yourself into and aren’t paying for more than you will ever use.

Let's take a minute to debrief processors, their history, and implications…

Demystifying Processors

There are 2 main kinds of processors (more precisely, processor architectures): x86 and ARM. What’s the diff, you ask?

x86:

- Primarily manufactured by semiconductor company Intel

- Originally a 16-bit architecture, then evolved to 32-bit, then eventually 64-bit (x86-64, which AMD designed as an extension of Intel’s x86)

- Primarily uses CISC architecture

ARM:

- Originated from UK semiconductor company ARM, which designs ARM processors but doesn’t manufacture them; it licenses its designs to other companies that build the actual chips

- Enables companies to adapt a general ARM processor design and then customize it to their specific needs (ex: what Apple did/does, although Apple also used Intel’s x86 chips for a while, so hold tight)

- Inspired by the RISC philosophy of making a simpler CPU

- Originally 32-bit, evolved to predominantly 64-bit

Okay…so the key difference is CISC vs. RISC. What’s the deal here? I’d definitely recommend checking out this post from Stanford; it’s the best article I have seen yet explaining this topic, and I’m referencing the example they discuss below!

CISC- Complex Instruction Set Computer Architecture

- Instruction set == group of instructions

- Each instruction is…well, complex

- For example, a command to multiply would look something like MULT.

- The underlying complexity of this command is handled directly in the processor’s hardware. The goal of CISC is to keep the number of lines of Assembly code generated as small as possible, because fewer lines means less RAM is needed to store the program (at one point in history, RAM was pricey)

- So for a compiler to transform a program written in a high-level language into Assembly code for an x86 processor (or any system that relies on CISC), the work is relatively minimal: less pressure on software, more on hardware

- CISC instructions → more complex → can take multiple clock cycles to execute

RISC- Reduced Instruction Set Computer Architecture

- CISC….but simpler

- In RISC, that CISC MULT command would look something like:

  - LOAD

  - LOAD

  - PROD

  - STORE

- 1 line of assembly in CISC == 4 in RISC

- More work for the compiler to transform the high-level language program into RISC-compatible instructions

- But less pressure on hardware: a smaller range of instructions to recognize and be responsible for

- Since the instructions are so simple, each RISC instruction executes in 1 clock cycle
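To make the “1 line of CISC == 4 lines of RISC” idea concrete, here is a minimal Python sketch of a toy machine. It assumes a made-up instruction format borrowed from the MULT / LOAD / PROD / STORE example above; this is not real x86 or ARM assembly, and the memory cells `a`/`b` and registers `r1`/`r2` are hypothetical names. Both “compiled” versions do the same multiplication; the RISC one just spells it out in more, simpler steps.

```python
# Toy model only: NOT real x86 or ARM assembly. Instruction names follow the
# MULT / LOAD / PROD / STORE example above; "a", "b", "r1", "r2" are hypothetical.


def compile_mult_cisc(a, b):
    """CISC-style: one instruction multiplies two memory cells in place."""
    return [("MULT", a, b)]


def compile_mult_risc(a, b):
    """RISC-style: the compiler spells the same work out as four simple steps."""
    return [
        ("LOAD", "r1", a),     # register r1 <- memory[a]
        ("LOAD", "r2", b),     # register r2 <- memory[b]
        ("PROD", "r1", "r2"),  # r1 <- r1 * r2 (registers only)
        ("STORE", a, "r1"),    # memory[a] <- r1
    ]


def run(program, memory):
    """Execute a toy program on a copy of `memory` (a dict) and return the copy."""
    memory = dict(memory)
    regs = {}
    for op, x, y in program:
        if op == "MULT":       # complex: read memory, multiply, write memory
            memory[x] = memory[x] * memory[y]
        elif op == "LOAD":
            regs[x] = memory[y]
        elif op == "PROD":
            regs[x] = regs[x] * regs[y]
        elif op == "STORE":
            memory[x] = regs[y]
        else:
            raise ValueError(f"unknown op {op!r}")
    return memory


mem = {"a": 6, "b": 7}
cisc = compile_mult_cisc("a", "b")
risc = compile_mult_risc("a", "b")
print(len(cisc), run(cisc, mem))  # 1 {'a': 42, 'b': 7}
print(len(risc), run(risc, mem))  # 4 {'a': 42, 'b': 7}
```

The CISC `MULT` works straight on memory, while the RISC `PROD` only multiplies values already sitting in registers, which is why the RISC version needs the extra `LOAD` and `STORE` steps.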

The reaction to this architecture when it was originally introduced in 1980 was like “okay, this is nice…i guess…” but no one really cared. The market was focused on PCs at the time, so the remarkable power efficiency that RISC enabled was not the most sought-after feature, and not many were developing for the RISC architecture either. This all drastically changed when portable devices like mobile phones arrived and power consumption became a significant concern; RISC proved to be a valuable solution. Since there is less pressure on hardware, and the programs running on mobile devices are pre-compiled, RISC lets programs run well on mobile devices without completely draining the battery.

Though the RISC version has more lines of assembly code to execute, its instructions are simpler and take only 1 clock cycle each, so the MULT operation executes in a comparable amount of time on the 2 architectures. It’s a matter of deciding which entity should face the pressure when executing the program: the software (in RISC) or the hardware (in CISC).
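The classic CPU performance equation shows why the two can come out even. The numbers plugged in below are hypothetical: assume the CISC MULT takes 4 clock cycles, each RISC instruction takes 1, and both chips share the same clock period $t$.

$$
\begin{aligned}
\frac{\text{time}}{\text{program}} &= \frac{\text{instructions}}{\text{program}} \times \frac{\text{cycles}}{\text{instruction}} \times \frac{\text{time}}{\text{cycle}} \\
\text{CISC: } &\; 1 \times 4 \times t = 4t \\
\text{RISC: } &\; 4 \times 1 \times t = 4t
\end{aligned}
$$

CISC shrinks the instructions-per-program factor and pays for it in cycles per instruction; RISC makes the opposite trade.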

Wait…what’s with all this bit talk?

So a bit is the smallest unit of data: a 0 or a 1. When we say a system is 32-bit, one of the main things we are talking about is the width of a memory address, which determines how many possible addresses are available to store data. So:

- 32-bit == 2^32 possible addresses, which (with one byte per address) caps usable RAM at 4GB

- 64-bit == 2^64 possible addresses

But there are tiers that determine how much memory your executing program has access to: a hierarchy of entities in your system that each set a bit width. First comes the architecture supported by the processor, then the OS, and finally the executing program. If the processor is 32-bit, all following entities in the hierarchy MUST be 32-bit or less. However, if the processor is 64-bit, it can still run a 32-bit OS or a 32-bit program, but that sub-entity can only use the 32-bit range of addresses rather than the full 64-bit range supported by the processor. There’s a trickle-down effect of bit capacity and support…and nowadays systems predominantly support the 64-bit architecture.
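The arithmetic behind those numbers is quick to check in Python. This sketch assumes byte-addressable memory (one byte per address), and `effective_bits` is a hypothetical helper that models the trickle-down rule as “the narrowest layer wins.”

```python
# Assumes byte-addressable memory: each address names exactly one byte.
GIB = 2**30  # bytes in one gibibyte


def addressable_bytes(address_bits: int) -> int:
    """Number of distinct addresses an address_bits-wide address can hold."""
    return 2**address_bits


def effective_bits(*layers: int) -> int:
    """Hypothetical model: the narrowest layer (processor, OS, program) caps the stack."""
    return min(layers)


print(addressable_bytes(32) // GIB)  # 4 (GiB)
print(addressable_bytes(64) // GIB)  # 17179869184 (GiB, i.e. 16 EiB)
# 64-bit processor, 64-bit OS, 32-bit program: the program still sees 32-bit addresses
print(effective_bits(64, 64, 32))    # 32
```

In practice, current 64-bit chips don’t wire up all 64 address bits (x86-64 processors typically use 48-bit virtual addresses, for example), so 16 EiB is a theoretical ceiling.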

x86 vs ARM, CISC vs RISC, 32 bit vs 64 bit, whew! All of this is great, but how has this all played out in history? Going back to our lovely case study subject of the day: Apple.

So Apple now, as of 2023, designs the processors for all of its devices in-house (the chips themselves are fabricated by TSMC).

Check out this great intro video to get up to speed (I’m also using refs from this video!)

Apple & PC processors

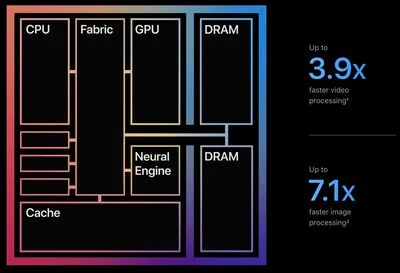

From 2006 until 2020, Apple’s Macs ran on Intel’s x86 processors (before that, Apple had used Motorola 68k and then PowerPC processors). Though the CISC architecture was complex and power-hungry, it proved to be a decent option for PCs, since Intel was able to keep investing in its technology and keep up with the demands of the industry. But that all changed in 2020, when Apple began moving away from Intel and switched to the M1 chip, an ARM-based SoC that used radically less power while still delivering efficient results. The M1 was a disruptor in the industry because it showed the world that ARM processors were capable of powering PCs.

Because the M1 is an SoC (system on a chip), the CPU, GPU, and other components all sit on a single chip rather than being dispersed across the motherboard/logic board, which eliminates unnecessary latency when those components talk to each other. These components also share access to a unified memory pool. And because the M1 is ARM-based, it follows the RISC architecture. With less pressure on the hardware and more tightly coupled components, the M1 chip is faster, more efficient, and more power-smart.

Apple & mobile processors

Apple actually originally reached out to Intel to manufacture processors for the iPhone, but Intel’s CEO declined the offer as he did not think the iPhone would be all that successful…yikes. So Apple turned to Samsung, which ended up manufacturing the first iPhone’s processor, a 32-bit ARM-based chip. Apple Silicon then emerged in 2010 with the A4, the first Apple Silicon SoC, and a few years later came the A7, the first 64-bit Apple SoC.

So, what is a CPU?

Ok soooo that’s basically processors in a nutshell. But remember when I mentioned the whole processor vs. CPU ambiguity issue? Take the M1 chip: it’s a processor. Because it follows the System on a Chip design, the M1 consists of a variety of powerful components…things like the CPU, GPU, Neural Engine, DRAM, etc. Wait, but don’t some people call the M1 chip a CPU? But the M1 contains a CPU? So a CPU contains a CPU? What is it, a CPU or a processor? Exactly, the ambiguity in question. The answer is that the terms are generally used interchangeably, because modern-day CPUs are microprocessors; the crux of the situation is how the technology has evolved. And when “CPU” is used to mean the entire chip, within it lie subentities known as processor cores that do the actual processing work.

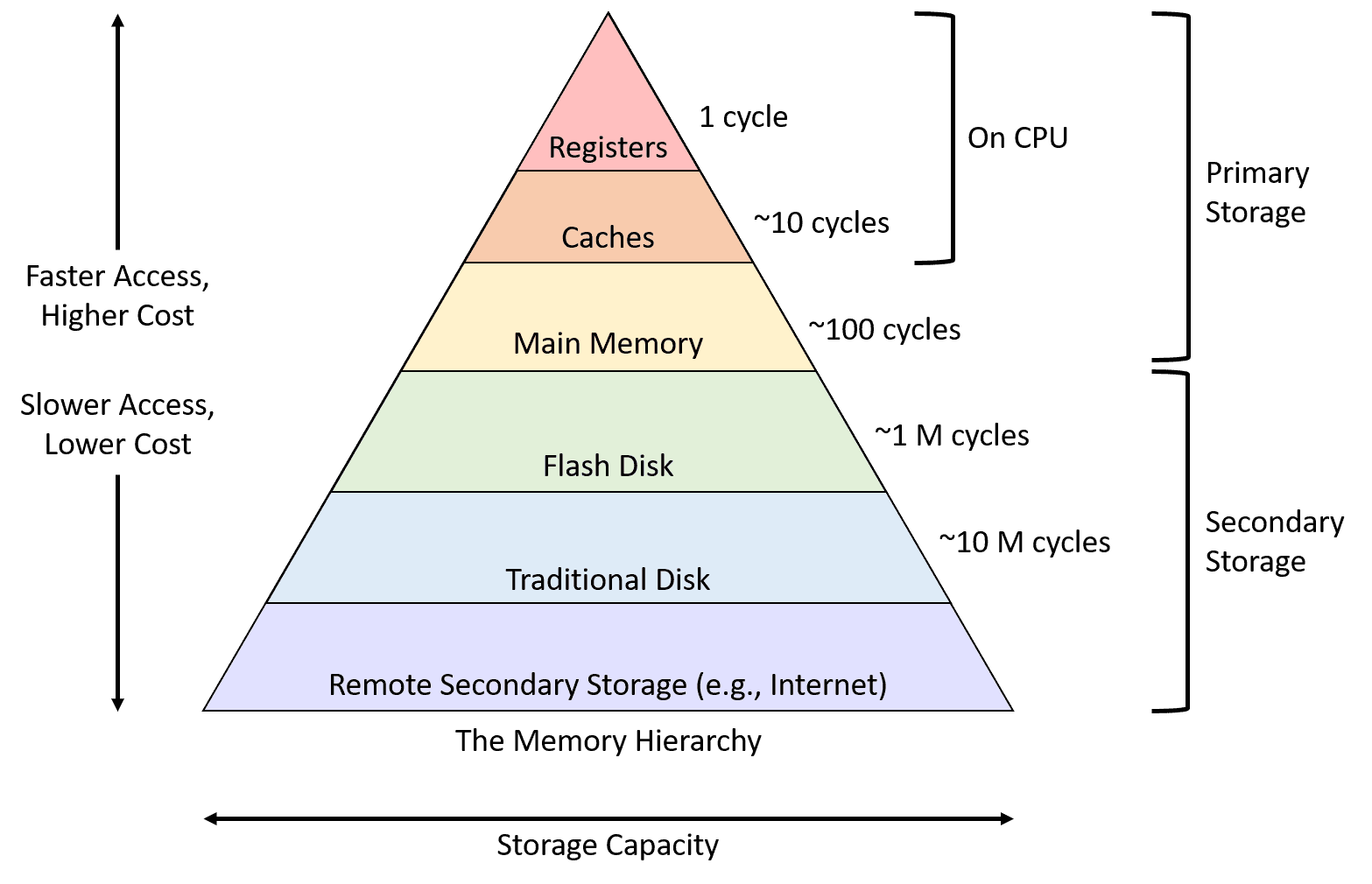

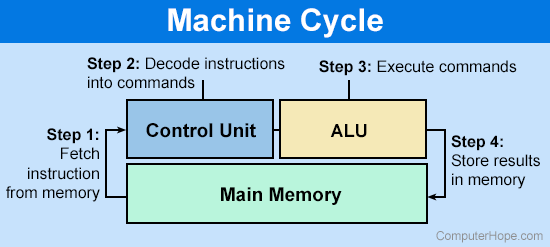

The CPU is known as the brains of the computer. In very technical terms, the way I view it, the CPU is the entity that carries out the Instruction cycle, aka the Fetch-Decode-Execute cycle. So under this very technical definition, the CPU is a part of the processor chip that consists of components like the control unit, the arithmetic logic unit (ALU), registers (like the program counter), and the clock. In very simple terms, the CPU’s sole responsibility is to follow that cycle. First, it fetches an instruction from memory. Memory comes in tiers (there are always tiers to everything): the registers in the CPU, cache (with levels here too), RAM (main memory), and then secondary storage like an SSD. The further down the list, the longer it takes to access, and everything has to trickle up to the CPU’s registers for processing.
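Here is a toy Python sketch of that trickle-up behavior, under loudly stated assumptions: the tier names come from the list above, but the costs are made-up relative units (not real latencies), every tier has unlimited space, and registers are treated like one more address-keyed store, which real CPUs don’t do.

```python
# Toy memory hierarchy. Tier names follow the list above; the costs are
# made-up relative units that only grow down the list, NOT real latencies.
TIERS = [("registers", 1), ("cache", 4), ("RAM", 100), ("SSD", 10_000)]


def fetch(address, contents):
    """Search the tiers top-down and copy a hit up into every faster tier.

    `contents` maps tier name -> {address: value}. Unlike real caches and
    registers, tiers here never fill up, so nothing is ever evicted.
    Returns (value, total hypothetical cost of the search).
    """
    cost = 0
    for depth, (name, tier_cost) in enumerate(TIERS):
        cost += tier_cost
        if address in contents[name]:
            value = contents[name][address]
            for faster, _ in TIERS[:depth]:  # trickle up toward the registers
                contents[faster][address] = value
            return value, cost
    raise KeyError(address)


contents = {"registers": {}, "cache": {}, "RAM": {}, "SSD": {0x10: "instr"}}
print(fetch(0x10, contents))  # ('instr', 10105): the first access goes all the way down
print(fetch(0x10, contents))  # ('instr', 1): now it sits at the top tier
```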

After fetching the instruction, the CPU decodes it, translating it into a command the rest of the CPU can act on. So the instruction is fetched and prepped. Then, during execution, the command is carried out and the result is stored or handled as needed.
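The cycle can be sketched as a tiny Python interpreter. It assumes a made-up text instruction set (`LOAD`, `PROD`, `STORE` from the RISC example earlier, plus a hypothetical `HALT`); a real CPU decodes binary machine code in hardware, but the loop has the same shape: fetch at the program counter, decode, execute, repeat.

```python
# Toy CPU with a made-up text instruction set (LOAD / PROD / STORE from the
# RISC example, plus a hypothetical HALT). Real CPUs decode binary machine code.


def run_cpu(program, memory):
    """Repeat Fetch-Decode-Execute until HALT; return (memory, steps taken)."""
    memory = dict(memory)
    registers = {"r1": 0, "r2": 0}
    pc = 0      # program counter: index of the next instruction to fetch
    steps = 0   # one pass through the cycle per instruction
    while True:
        instruction = program[pc]             # FETCH the instruction at the PC
        pc += 1                               # advance to the next instruction
        op, *operands = instruction.split()   # DECODE: opcode + operands
        steps += 1
        if op == "LOAD":                      # EXECUTE: LOAD reg addr
            reg, addr = operands
            registers[reg] = memory[addr]
        elif op == "PROD":                    # PROD dst src: dst = dst * src
            dst, src = operands
            registers[dst] *= registers[src]
        elif op == "STORE":                   # STORE addr reg
            addr, reg = operands
            memory[addr] = registers[reg]
        elif op == "HALT":
            return memory, steps
        else:
            raise ValueError(f"cannot decode {instruction!r}")


program = ["LOAD r1 a", "LOAD r2 b", "PROD r1 r2", "STORE a r1", "HALT"]
print(run_cpu(program, {"a": 6, "b": 7}))  # ({'a': 42, 'b': 7}, 5)
```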

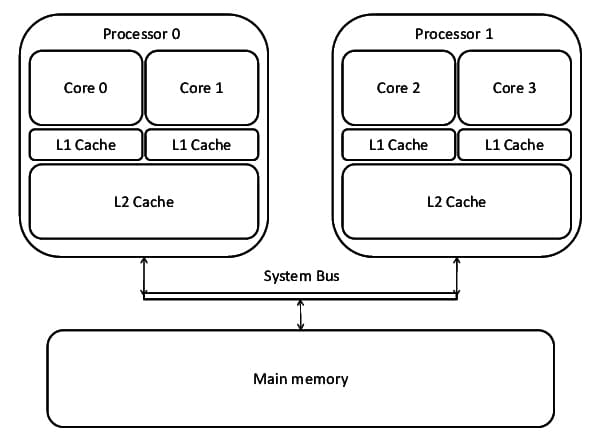

Now, what we discussed is the behavior of a system with a single core, but a processor can have more than 1 core. It can be a dual-core, quad-core, etc-core processor. This means there are multiple entities that can go through the Fetch-Decode-Execute cycle at the same time…aka multiple instructions can be executed in parallel, woo multitasking ftw!

Each core has its own memory (its registers and typically some private cache) plus access to shared memory, which can boost the performance and capabilities of a system significantly. A typical consumer using a laptop for web surfing or whatever may never use the capacity of more than a few cores (like 4 max), but for tasks like graphics rendering and heavy media processing, these cores can be of great benefit.

The story doesn’t simply end because the cores are present: the software in use must also take advantage of them and parallelize tasks appropriately. No matter what great features the underlying system has, for an end user to reap the benefits, the intermediary software pieces must also make use of those features, otherwise it’s a missed opportunity (think about the 32-bit vs. 64-bit trickle-down situation).
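As a rough illustration of software opting in to multiple cores, here is a minimal Python sketch assuming a CPU-bound job (counting primes by trial division, chosen only as an example workload). The program has to split the work into chunks itself; only then can the OS run those chunks on separate cores.

```python
import math
import os
from concurrent.futures import ProcessPoolExecutor


def count_primes(bounds):
    """CPU-bound example work: count primes in [lo, hi) by trial division."""
    lo, hi = bounds
    return sum(
        1
        for n in range(max(lo, 2), hi)
        if all(n % d for d in range(2, math.isqrt(n) + 1))
    )


def split_range(lo, hi, parts):
    """Cut [lo, hi) into at most `parts` contiguous chunks, one per worker."""
    step = max(1, -(-(hi - lo) // parts))  # ceiling division, never zero
    return [(s, min(s + step, hi)) for s in range(lo, hi, step)]


def count_primes_parallel(limit, workers=None):
    """Hand one chunk to each worker process so separate cores can run at once."""
    workers = workers or os.cpu_count() or 1
    chunks = split_range(0, limit, workers)
    with ProcessPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(count_primes, chunks))


if __name__ == "__main__":
    limit = 100_000
    serial = count_primes((0, limit))        # one core does all the work
    parallel = count_primes_parallel(limit)  # work spread across cores
    print(serial == parallel, "cores:", os.cpu_count())
```

It uses processes rather than threads because in standard CPython the global interpreter lock (GIL) keeps threads from executing Python code on multiple cores at once. Any speedup depends on your core count and workload, so measure rather than assume.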

This leads me to multitasking. The presence of multiple cores in a processor sets the stage nicely for multitasking. The cores are the hardware part of the picture, whereas threads, which the OS schedules onto those cores, are on the software side (more on that in my OS post, stay tuned..), and together they paint the picture for multithreading in applications.

Researching all of this was interesting. A lot of it I was already familiar with, but this was a matter of telling a story and connecting the dots between all the pieces rather than looking at each piece separately. Kind of like what Apple did with the M1? Ok, I’m done lol.

© Haritha Mohan